Microsoft Teams Local Media Optimization, or LMO for short, is an update to what was called Media Bypass. To understand what LMO is and how it benefits enterprise telephony deployments, we must first spend a few minutes on its elder, Media Bypass.

Understanding Media Bypass and its role in Enterprise Voice

Media Bypass in Microsoft Teams is a feature of Direct Routing. Where the PSTN connectivity is closer to the client computer making the call than the Microsoft Cloud is, it makes sense for the voice traffic to take the shortest and, by assumption, the most optimal path. Logically, this would most probably be directly between the client and the PSTN gateway the call is routed through.

By default, voice traffic, or media as we commonly call it, travels from the Teams client to Media Relay servers in Microsoft Azure. From there it is routed over the Microsoft Teams backbone, out to PSTNHUB (the Microsoft Teams Direct Routing head-ends) and back down to the PSTN gateway.

In regional deployments where the PSTN gateway is remote from the client, perhaps in a datacenter in some other country, sending media via the Microsoft Teams Media Relay servers and over the Microsoft global network makes the most sense, so the default configuration is optimal and fit for purpose.

However, when that PSTN gateway is at the same local office as the client making the call, the media traffic takes a convoluted path from on-prem to the cloud and back on-prem when it could remain local. Enter Media Bypass. Media Bypass does exactly what it says: it bypasses the Relay and goes straight from the client to the PSTN gateway.

How Media Bypass Works

In order for Media Bypass to work, both the client and the PSTN Gateway need to be able to discover each other during call setup. This typically means that traffic should be routable directly between the client and the SBC with no port blocking on the required media ports.

Teams discovers the destination and port using ICE (Interactive Connectivity Establishment), defined in RFC 5245, with the SBC running the lightweight ICE-Lite variant. ICE is a connection establishment protocol that allows two devices to exchange media from behind internet NAT routers and firewalls.

ICE opens specific ports and binds them to every IP address that can route to the client. These address and port pairs are called candidates. The first is always the private IP address of the client, e.g. 192.168.1.10:5000. If the client is internet connected, it can make a STUN request to a Teams STUN server, which discovers the client’s public IP and opened firewall port, e.g. 89.129.134.15:58193. This then becomes a second valid path for sending media to the client.
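To make the idea of candidates more concrete, here is a minimal Python sketch that models the two candidates from the example above and ranks them with the priority formula from RFC 5245. The `Candidate` class is purely illustrative and is not a Teams or SBC API; the type preference values are the ones the RFC recommends, and the local preference of 65535 assumes a client with a single network interface.

```python
from dataclasses import dataclass

# Type preferences recommended by RFC 5245 (higher is preferred).
TYPE_PREFERENCE = {"host": 126, "srflx": 100, "relay": 0}


@dataclass(frozen=True)
class Candidate:
    """Illustrative model of an ICE candidate (not a real Teams API)."""
    kind: str           # "host" = private IP, "srflx" = public IP learned via STUN
    ip: str
    port: int
    component: int = 1  # 1 = RTP, 2 = RTCP

    @property
    def priority(self) -> int:
        # RFC 5245: 2^24 * type pref + 2^8 * local pref + (256 - component ID).
        # Assumption: a single interface, so local preference is 65535.
        local_pref = 65535
        return (TYPE_PREFERENCE[self.kind] << 24) + (local_pref << 8) + (256 - self.component)


host = Candidate("host", "192.168.1.10", 5000)
srflx = Candidate("srflx", "89.129.134.15", 58193)

# List candidates from most to least preferred.
for c in sorted([srflx, host], key=lambda c: c.priority, reverse=True):
    print(c.kind, f"{c.ip}:{c.port}", c.priority)
```

Under plain ICE rules the host (private) candidate ranks above the server reflexive (public) one, which is worth keeping in mind when reading why PSTN calls end up on the internet candidate anyway.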

Why is the internet important when the PSTN gateway is local? The simple reason is that the call controller, i.e. the service that handles messaging (SIP and REST), lives in the cloud. This service passes the messages between client and SBC and therefore cannot handle private network addresses. The call controller always remains in the call messaging (signalling) path.

With PSTN calls, the path chosen is always the internet candidate for the client, because a local candidate is invalid for PSTN calls.
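The effect of that rule can be sketched in a few lines of Python. This models only the statement above (private addresses are discarded for PSTN calls); it is not how Teams implements the check, and the function name `usable_for_bypass` is hypothetical.

```python
import ipaddress


def usable_for_bypass(candidates):
    """Keep only candidates with a public (non-private) address.

    Hypothetical helper: models the rule that a local candidate
    is invalid for PSTN calls under classic media bypass.
    """
    return [
        (ip, port)
        for ip, port in candidates
        if not ipaddress.ip_address(ip).is_private
    ]


client_candidates = [
    ("192.168.1.10", 5000),    # private (host) candidate
    ("89.129.134.15", 58193),  # public candidate discovered via STUN
]

print(usable_for_bypass(client_candidates))
```

Only the public candidate survives the filter, which is why both ends need a publicly reachable address for media bypass to work.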

Once both the SBC and the client have agreed on the Internet based ICE candidate to exchange media between them, the media is established and does not leave the network, cutting the cloud out of the media path completely, hence media bypass.

The Media Bypass Problem

Hopefully, you can start to see why media bypass was not that well adopted by enterprises using Microsoft Teams. It is predicated on both the client and, more importantly, the SBC having a public IP.

With the SBC, this must be a dedicated Public IP with 1:1 NAT. This causes enterprises significant network compatibility and security issues because in most cases where media bypass would make sense, the SBC is in a remote office several network segments downstream from their consolidated internet egress at a regional datacenter.

The first issue is the technical challenge of acquiring a dedicated IP for each SBC at the respective internet firewall. The second is security: NAT’ing a publicly accessible IP through a perimeter security appliance all the way down to a Corpnet local subnet is practically impossible to get signed off.

The solution to that security problem is to create another by requesting local internet breakout at the site. This is a hard sell because it introduces another penetration point and requires another set of security tools and governance around it, which meant this option was never really considered viable.

But let’s say that these challenges were overcome. There remains one more, and probably more critical, implication of implementing media bypass in this form: the media would need to be hairpinned on the untrusted side of the NAT firewall, because it is routed using internet ICE candidates, not the private IPs of the client and SBC.

Consider the regional perimeter firewall scenario. This would mean the media would need to be routed out of the local site, over the corporate WAN to a datacenter, to the perimeter, hairpinned and sent right back to where it came from. The configuration alone makes the effort seem not worth it.
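The cost of the hairpin is easiest to see by writing the two paths out side by side. The sketch below is only an illustration: the hop names are made-up labels for the scenario just described, not real devices or measured routes.

```python
# Hop names are illustrative labels for the scenario above, not real devices.
HAIRPINNED = [
    "client (branch LAN)",
    "corporate WAN",
    "regional datacenter",
    "perimeter firewall (untrusted side)",
    "regional datacenter",
    "corporate WAN",
    "SBC (branch LAN)",
]
DIRECT = ["client (branch LAN)", "SBC (branch LAN)"]


def wan_crossings(path):
    """Count how many times the media crosses the corporate WAN."""
    return path.count("corporate WAN")


for name, path in (("hairpinned", HAIRPINNED), ("direct", DIRECT)):
    print(f"{name}: {len(path) - 1} hops, {wan_crossings(path)} WAN crossings")
```

Every call in the hairpinned case crosses the WAN twice, even though the client and the SBC sit in the same office.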

A Related Topology Problem

Now is probably a good time to digress a little and discuss another issue that is not linked to media bypass directly, but shares some of the problems associated with having local PSTN gateways, and is perhaps the bigger problem in all of this.

Going back to 1:1 public IP assignment: there are significant security concerns in punching holes through firewalls to dozens of on-prem SBCs to use them as Direct Routing egress points. Each NAT and each device is a potential risk, and if one is compromised, it can give access to the entire network.

Security best practice dictates that you should minimise attack surfaces, so having one, maybe two, egress points that can serve multiple internal routes and destinations makes much more sense and is easier from a topology perspective.

The Right Direct Routing Solution

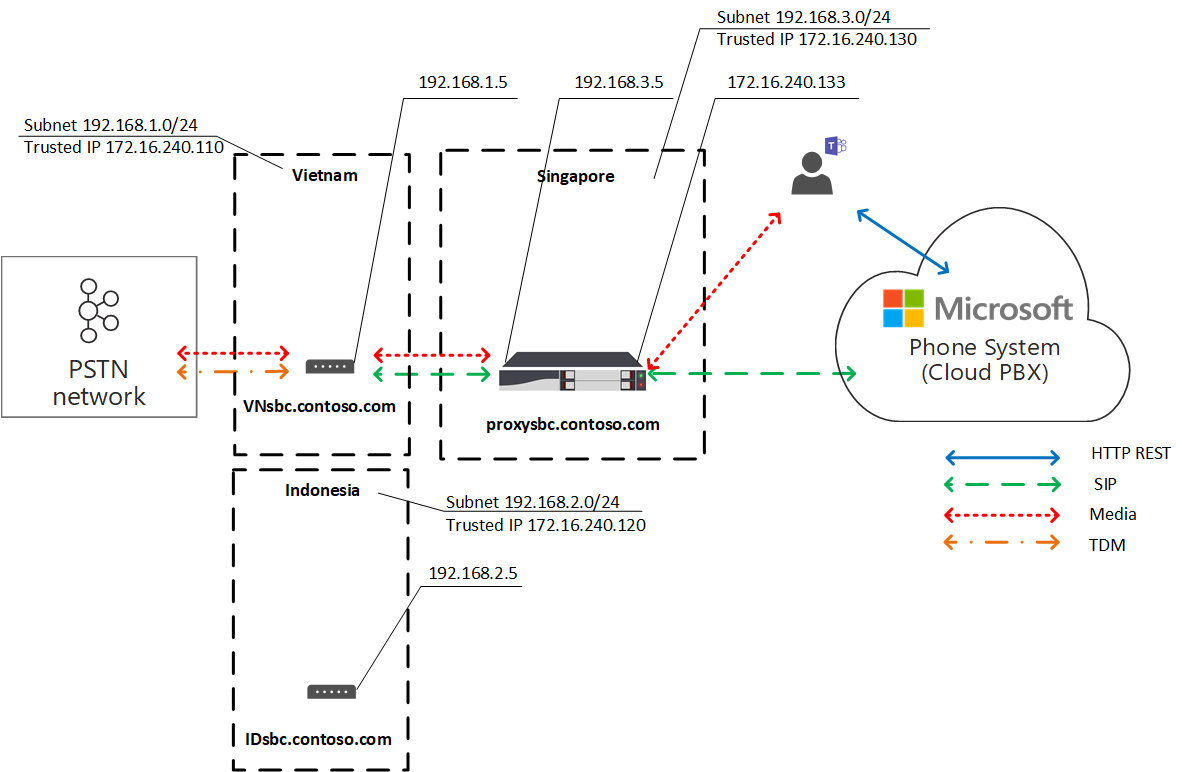

The right way to connect multiple local branch PSTN gateways to Microsoft Teams Direct Routing is to use regional SBCs sitting in your perimeter datacenter. The ideal place is Microsoft Azure. You can deploy two in Azure for redundancy, with all the protections and scale that come with it, to create a secure egress for all your Teams to local SBC traffic.

The major benefit of deploying Azure-based SBCs is that, where you have ExpressRoute or an Azure VPN in place, the traffic never actually leaves your network or Microsoft's. This means that end to end you have a private voice network (to some degree).

In this topology you’d connect all your local gateways to the regional SBCs using private IPs (assuming on-net end to end connectivity) and your regional SBCs to Teams PSTNHUB.

One massive benefit is that it’s likely there would be little to no security involvement in onboarding internal SBCs to the regional ones, and no network configuration to do beyond the initial Azure connection, so on-ramp would be much quicker.

However, it does make media bypass impossible to deploy.

Local Media Optimization Solves This

Microsoft Teams Local Media Optimization allows you to implement the preferred topology of cloud based egress SBCs (Proxy SBCs essentially) and still provide media bypass to the local SBC and client.

In a nutshell, Local Media Optimization allows the client and local SBC to establish media using their private IP addresses without Microsoft Teams being aware of the local SBC in its topology. This means you do not need to add the local SBC as a PSTN Gateway in the Teams Direct Routing configuration.
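As a rough mental model, the outcome can be pictured as a lookup: if the client sits on a site that has its own SBC, media goes to that SBC's private address; otherwise it goes to the proxy SBC. The Python sketch below is a simplification under stated assumptions, not the actual mechanism. The site names, subnets, SBC addresses and function names are all hypothetical; the official documentation linked at the end of this article describes how the real decision is made.

```python
import ipaddress

# Hypothetical site-to-subnet map (assumption for illustration only).
SITE_SUBNETS = {
    "branch-a": ipaddress.ip_network("192.168.1.0/24"),
    "branch-b": ipaddress.ip_network("192.168.2.0/24"),
}

# Hypothetical private addresses of local SBCs; branch-b has none.
LOCAL_SBCS = {"branch-a": "192.168.1.250"}

# Hypothetical private address of the cloud-based proxy SBC.
PROXY_SBC = "10.0.0.4"


def client_site(client_ip):
    """Return the site whose subnet contains the client, or None."""
    addr = ipaddress.ip_address(client_ip)
    for site, subnet in SITE_SUBNETS.items():
        if addr in subnet:
            return site
    return None


def media_target(client_ip):
    """Pick the local SBC when the client's site has one, else the proxy SBC."""
    return LOCAL_SBCS.get(client_site(client_ip), PROXY_SBC)


print(media_target("192.168.1.10"))  # client on a site with a local SBC
print(media_target("192.168.2.20"))  # client on a site without a local SBC
print(media_target("203.0.113.7"))   # client outside the corporate network
```

The point of the sketch is that the decision depends on where the client is, while the local SBC itself never needs to be registered with Teams.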

This is a massive feature that is much needed for enterprise telephony looking to utilise the Microsoft Cloud and this will give way to more in-branch survivability options in the future.

For more information on Local Media Optimization, please refer to the official documentation: https://docs.microsoft.com/en-us/microsoftteams/direct-routing-media-optimization